(Geneva) – Basic humanity and the public conscience support a ban on fully autonomous weapons, Human Rights Watch said in a report released today. Countries participating in an upcoming international meeting on such “killer robots” should agree to negotiate a prohibition on the weapons systems’ development, production, and use.

The 46-page report, Heed the Call: A Moral and Legal Imperative to Ban Killer Robots, finds that fully autonomous weapons would violate what is known as the Martens Clause. This long-standing provision of international humanitarian law requires emerging technologies to be judged by the “principles of humanity” and the “dictates of public conscience” when they are not already covered by other treaty provisions.

“Permitting the development and use of killer robots would undermine established moral and legal standards,” said Bonnie Docherty, senior arms researcher at Human Rights Watch, which coordinates the Campaign to Stop Killer Robots. “Countries should work together to preemptively ban these weapons systems before they proliferate around the world.”

The 1995 preemptive ban on blinding lasers, which was motivated in large part by concerns under the Martens Clause, provides precedent for prohibiting fully autonomous weapons as they come closer to becoming reality.

The report was co-published with the Harvard Law School International Human Rights Clinic, for which Docherty is associate director of armed conflict and civilian protection.

More than 70 governments will convene at the United Nations in Geneva from August 27 to 31, 2018, for their sixth meeting since 2014 on the challenges raised by fully autonomous weapons, also called lethal autonomous weapons systems. The talks under the Convention on Conventional Weapons, a major disarmament treaty, were formalized in 2017, but they are not yet directed toward a specific goal.

Human Rights Watch and the Campaign to Stop Killer Robots urge states party to the convention to agree to begin negotiations in 2019 for a new treaty that would require meaningful human control over weapons systems and the use of force. Fully autonomous weapons would select and engage targets without meaningful human control.

To date, 26 countries have explicitly supported a prohibition on fully autonomous weapons. Thousands of scientists and artificial intelligence experts, more than 20 Nobel Peace Laureates, and more than 160 religious leaders and organizations of various denominations have also demanded a ban. In June, Google released a set of ethical principles that includes a pledge not to develop artificial intelligence for use in weapons.

At the Convention on Conventional Weapons meetings, almost all countries have called for retaining some form of human control over the use of force. The emerging consensus for preserving meaningful human control, which is effectively equivalent to a ban on weapons that lack such control, reflects the widespread opposition to fully autonomous weapons.

Human Rights Watch and the Harvard clinic assessed fully autonomous weapons under the core elements of the Martens Clause. The clause, which appears in the Geneva Conventions and is referenced by several disarmament treaties, is triggered by the absence of specific international treaty provisions on a topic. It sets a moral baseline for judging emerging weapons.

The groups found that fully autonomous weapons would undermine the principles of humanity, because they would be unable to apply either compassion or nuanced legal and ethical judgment to decisions to use lethal force. Without these human qualities, the weapons would face significant obstacles in ensuring the humane treatment of others and showing respect for human life and dignity.

Fully autonomous weapons would also run contrary to the dictates of public conscience. Governments, experts, and the broader public have widely condemned the loss of human control over the use of force.

Partial measures, such as regulations or political declarations short of a legally binding prohibition, would fail to eliminate the many dangers posed by fully autonomous weapons. In addition to violating the Martens Clause, the weapons raise other legal, accountability, security, and technological concerns.

In previous publications, Human Rights Watch and the Harvard clinic have elaborated on the challenges that fully autonomous weapons would present for compliance with international humanitarian law and international human rights law, analyzed the gap in accountability for the unlawful harm caused by such weapons, and responded to critics of a preemptive ban.

The 26 countries that have called for the ban are: Algeria, Argentina, Austria, Bolivia, Brazil, Chile, China (use only), Colombia, Costa Rica, Cuba, Djibouti, Ecuador, Egypt, Ghana, Guatemala, the Holy See, Iraq, Mexico, Nicaragua, Pakistan, Panama, Peru, the State of Palestine, Uganda, Venezuela, and Zimbabwe.

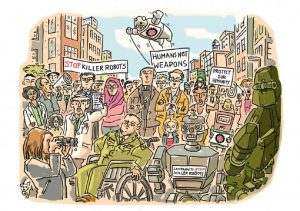

The Campaign to Stop Killer Robots, which began in 2013, is a coalition of 75 nongovernmental organizations in 32 countries that is working to preemptively ban the development, production, and use of fully autonomous weapons. Docherty will present the report at a Campaign to Stop Killer Robots briefing for Convention on Conventional Weapons delegates scheduled for August 28 at the United Nations in Geneva.

“The groundswell of opposition among scientists, faith leaders, tech companies, nongovernmental groups, and ordinary citizens shows that the public understands that killer robots cross a moral threshold,” Docherty said. “Their concerns, shared by many governments, deserve an immediate response.”

Human Rights Watch. Killer Robots Fail Key Moral, Legal Test. © 2018 by Human Rights Watch.